Spikes, short electrical pulses traveling between neurons, are the basis of information transmission in mammalian brains. Besides the total number of spikes in a given time interval (also referred to as the “firing rate” of a neuron), a significant part of the information is encoded in their relative timing. This marks a fundamental difference from typical machine learning models, such as artificial neural networks, where computational units communicate solely via quantities analogous to firing rates.

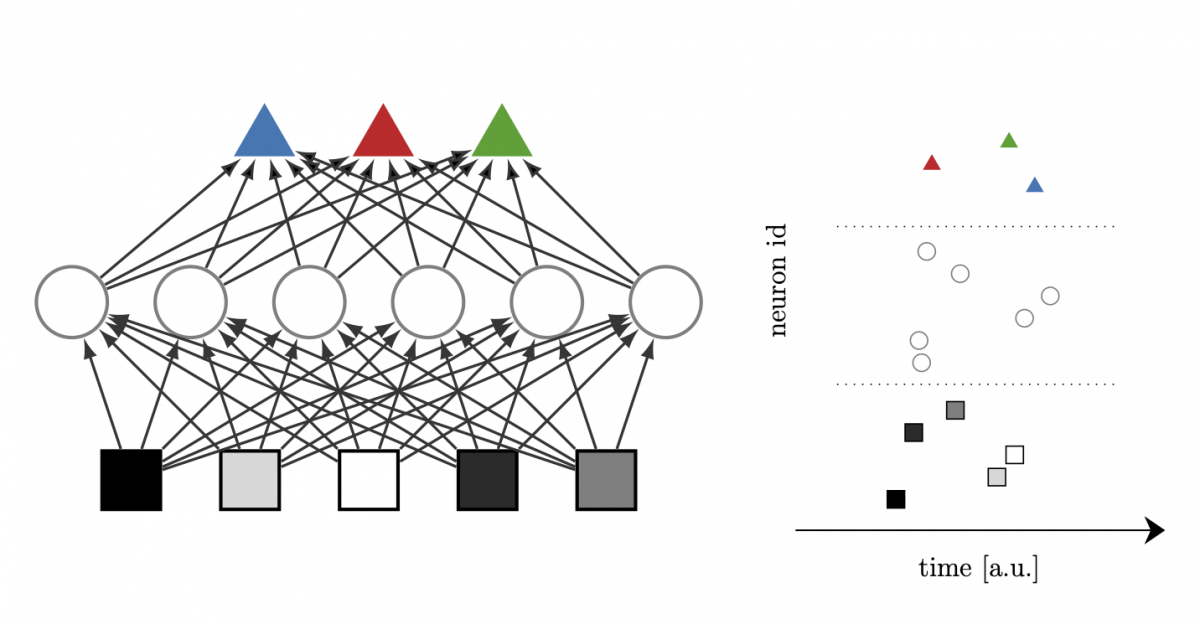

We have developed a new learning algorithm that efficiently solves pattern classification problems by making full use of spike timing. The algorithm is built on a rigorous mathematical description of spike times in biologically inspired neuron models as a function of their inputs. This makes it possible to precisely quantify how input spike times and synaptic strengths affect a neuron's output spike time, and to propagate this effect through networks of multiple layers (Figure 1). Such propagation is necessary for efficient credit assignment, i.e., adjusting synaptic weights throughout the network to reduce the mismatch between desired and actual output spike times, similar to the well-known error backpropagation algorithm.
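To make the idea of "spike time as a function of inputs" concrete, here is a minimal sketch. It is not the closed-form description the algorithm relies on: it swaps in a plain forward-Euler simulation of a leaky integrate-and-fire neuron with exponentially decaying synaptic currents. The function name, time constants, threshold, and step size are all illustrative assumptions.

```python
def first_spike_time(input_times, weights, tau_m=10.0, tau_s=5.0,
                     threshold=1.0, dt=0.01, t_max=100.0):
    """Return the first threshold crossing of a simulated neuron, or None.

    Assumed dynamics (illustrative, arbitrary time units):
        membrane potential: dV/dt = (-V + I) / tau_m
        synaptic current:   dI/dt = -I / tau_s, with I += w at each input spike
    """
    events = sorted(zip(input_times, weights))
    v = 0.0          # membrane potential, starting at rest
    i_syn = 0.0      # synaptic current
    next_event = 0   # index of the next input spike to deliver
    for step in range(int(round(t_max / dt))):
        t = step * dt
        # Deliver every input spike that has arrived by time t.
        while next_event < len(events) and events[next_event][0] <= t:
            i_syn += events[next_event][1]
            next_event += 1
        # One forward-Euler step for membrane and synapse.
        v += dt * (-v + i_syn) / tau_m
        i_syn -= dt * i_syn / tau_s
        if v >= threshold:
            return t + dt
    return None  # the neuron never reached threshold


baseline = first_spike_time([0.0, 1.0], [3.0, 3.0])
stronger = first_spike_time([0.0, 1.0], [4.0, 4.0])  # larger weights
delayed = first_spike_time([2.0, 3.0], [3.0, 3.0])   # later inputs
```

In this toy setting, stronger synapses move the output spike earlier and delayed inputs move it later by the same amount. The analytical description makes this dependence explicit, so that no step-by-step simulation is needed during learning.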

Figure 1: (Left) Classification network consisting of neurons (squares, circles, and triangles) grouped in layers. Information, e.g. the pixel brightness of an image, is passed from bottom to top. Here, a darker pixel is represented by an earlier spike. (Right) Each neuron spikes no more than once, and the time at which it spikes encodes the information.
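The coding scheme in Figure 1 can be sketched in a few lines. The linear mapping, the time window, and both function names are assumptions made for illustration. Reading out the class from the earliest output spike is likewise one plausible convention, not one spelled out in the text above.

```python
import math


def encode_pixels(pixels, t_early=0.0, t_late=30.0):
    """Map brightness in [0, 1] to one spike time per pixel.

    Assumed linear code: dark (0.0) -> t_early, bright (1.0) -> t_late.
    """
    times = []
    for p in pixels:
        p = min(max(p, 0.0), 1.0)  # clamp out-of-range brightness values
        times.append(t_early + p * (t_late - t_early))
    return times


def earliest_spike_label(output_times):
    """Return the index of the output neuron that spikes first.

    A neuron that never spikes is represented by None and treated as
    spiking infinitely late.
    """
    return min(range(len(output_times)),
               key=lambda k: math.inf if output_times[k] is None
               else output_times[k])


spike_times = encode_pixels([0.0, 0.5, 1.0])
label = earliest_spike_label([12.0, 7.5, None])
```

Because each neuron contributes at most one spike, a whole image is represented by one spike time per pixel, which is what makes the code sparse.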

Our new algorithm is particularly suited for neuromorphic systems such as the EBRAINS BrainScaleS-2 (BSS2) platform. The BSS2 chip houses 512 physical neurons, which can communicate via a configurable digital network. Neuromorphic architectures are optimized for spike-based communication, combining a massive acceleration with respect to real time (a 1000x speed-up in the case of BSS2) with significantly lower energy consumption than conventional computers. Neuromorphic systems are thus attractive both as research platforms and for deployment in low-power IoT devices.

BSS2 achieves accelerated emulation and low energy consumption by using physical representations of quantities such as the neuronal membrane potential, instead of the digital representations used in conventional computers. Unfortunately, physical representations are susceptible to noise. Additionally, due to production imperfections, the circuits of individual neurons are not identical, causing variability in neuron parameters. It is difficult to predict the impact of these effects on neuromorphic algorithms. By digitally simulating networks with similar, but well-controlled, perturbations, we can gain insights into these effects.
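As a rough illustration of what "well-controlled perturbations" can look like in a simulation, the sketch below models the two effects named above. The Gaussian distributions, the spread values, and the function names are assumptions, not the perturbation model used in the actual study.

```python
import random


def perturb_parameters(nominal, rel_spread, n_neurons, rng):
    """Draw one fixed parameter value per neuron around a nominal value.

    Models circuit-to-circuit variability: each neuron keeps its drawn
    value for the whole simulation.
    """
    return [rng.gauss(nominal, rel_spread * nominal) for _ in range(n_neurons)]


def jitter_spike_times(times, sigma, rng):
    """Add independent Gaussian jitter to each spike time (temporal noise)."""
    return [t + rng.gauss(0.0, sigma) for t in times]


rng = random.Random(0)  # fixed seed keeps the perturbation reproducible
# Hypothetical example: 10% spread on a nominal threshold of 1.0,
# drawn for 512 neurons, the neuron count of the BSS2 chip.
thresholds = perturb_parameters(1.0, 0.10, 512, rng)
noisy_inputs = jitter_spike_times([0.0, 15.0, 30.0], 0.5, rng)
```

Sweeping `rel_spread` and `sigma` over a grid and retraining for each setting is the kind of workload that benefits from running many simulations in parallel.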

Detailed exploration of these effects by digital simulation is computationally expensive, in particular for large networks. For these simulations, computing resources of the Piz Daint supercomputer at CSCS (Lugano, Switzerland) using the ICEI allocation for the Human Brain Project (HBP) were invaluable. In particular, being able to simultaneously run a large number of parallel simulations allowed us to systematically investigate the influence of various hardware-specific perturbations on learning performance. In these studies, our algorithm showed a high degree of stability when faced with, e.g., neuron parameter variability, increasing confidence in the suitability of our algorithm for a wide range of neuromorphic platforms.

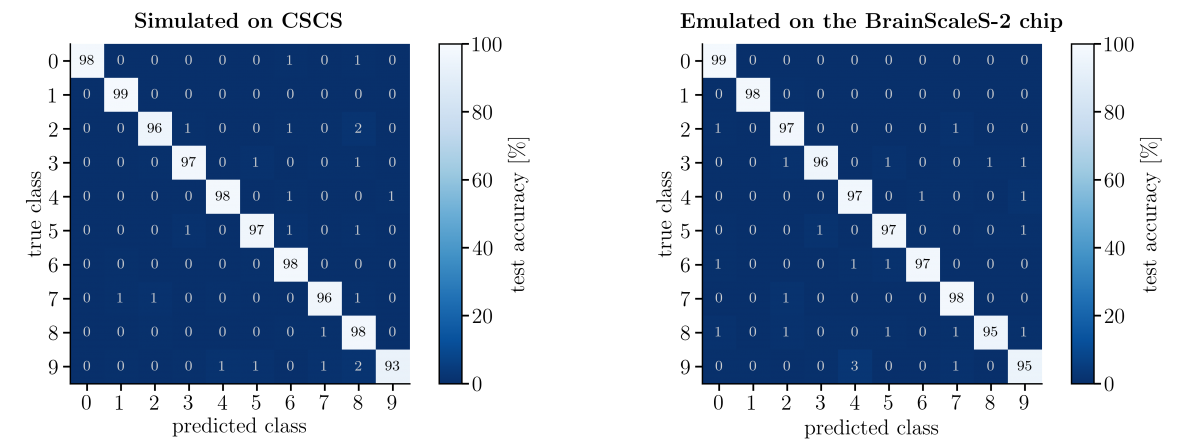

Relying on our results gathered from software simulations, we were able to realize an implementation of our algorithm on the neuromorphic platform BrainScaleS-2. We trained a network to classify the MNIST handwritten digits data set, achieving similar performance to pure software implementations (Figure 2). However, compared to software simulations, the hardware implementation is much faster and more energy efficient: up to 10,000 images can be classified in less than a second, consuming 8.4 µJ per image for a total power consumption of only 175 mW, comparable to the power consumption of a few off-the-shelf LEDs. Our hardware results provide a successful proof-of-concept implementation, highlighting the advantages of sparse but robust coding combined with fast, low-power silicon substrates, with significant potential for edge computing and neuroprosthetics.
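The quoted figures can be cross-checked with simple arithmetic. This assumes that the 175 mW is drawn continuously while classifying and that the 8.4 µJ per image accounts for all of it; both are assumptions made here, not statements from the measurements.

```python
energy_per_image_j = 8.4e-6   # 8.4 µJ per classified image
power_w = 0.175               # 175 mW total power consumption
n_images = 10_000

# Energy = power x time, so time per image = energy / power.
time_per_image_s = energy_per_image_j / power_w
total_energy_j = n_images * energy_per_image_j
total_time_s = total_energy_j / power_w

print(f"time per image: {time_per_image_s * 1e6:.0f} µs")
print(f"total energy:   {total_energy_j * 1e3:.0f} mJ")
print(f"total time:     {total_time_s:.2f} s")
```

Under these assumptions, 10,000 images take about 0.48 s and 84 mJ in total, consistent with "less than a second".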

Figure 2: We studied the feasibility of our algorithm in simulations on the Piz Daint HPC system at CSCS (left panel) before deploying it on the neuromorphic platform BrainScaleS-2 (right panel). Both panels show a confusion matrix of the classification results of a trained network on the MNIST test data set. In the ideal case, the matrix is zero everywhere except on the diagonal, and a clear diagonal is achieved in both cases. Notably, the left and right matrices are very similar, as is the final test accuracy (97.07% in simulation versus 96.97% in the emulation).
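For readers unfamiliar with confusion matrices, the following sketch shows how one is built from true and predicted labels, and how the test accuracy follows from its diagonal. The function names and the tiny label lists are made up for illustration.

```python
def confusion_matrix(true_labels, predicted_labels, n_classes=10):
    """Count how often true class i (row) was classified as class j (column)."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for true, predicted in zip(true_labels, predicted_labels):
        matrix[true][predicted] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal, i.e. correctly classified."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


# Hypothetical toy example with three classes and four test samples.
toy_matrix = confusion_matrix([0, 1, 2, 2], [0, 1, 2, 1], n_classes=3)
toy_accuracy = accuracy(toy_matrix)
```

In the toy example, the single off-diagonal entry records one sample of class 2 being mistaken for class 1, so three of four samples are classified correctly.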

Acknowledgements

We would like to thank the Manfred Stärk foundation for ongoing support. This research has received funding from the European Union’s Horizon 2020 Framework Programme for Research and Innovation under the Specific Grant Agreement No. 945539 (Human Brain Project SGA3).

References:

1. J. Göltz, L. Kriener, et al. “Fast and deep: energy-efficient neuromorphic learning with first-spike times,” arXiv:1912.11443, 2019. https://arxiv.org/abs/1912.11443

2. Conference talks: long version https://www.youtube.com/watch?v=ygLTW_6g0Bg and short version https://www.youtube.com/watch?v=mAD_0zEsPCM

3. NeuroTMA group: https://physio.unibe.ch/~petrovici/group/

Please contact:

Julian Göltz

NeuroTMA group, Department of Physiology, University of Bern, Switzerland and Kirchhoff-Institute for Physics, Heidelberg University, Germany

julian.goeltz@kip.uni-heidelberg.de